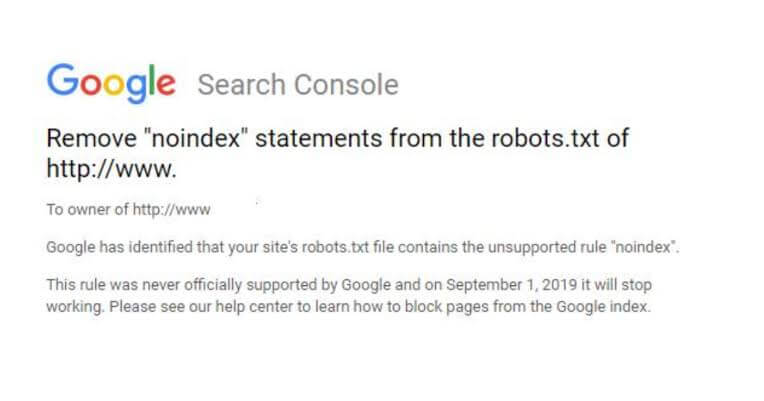

Search Console asks site owners to remove the noindex rule from robots.txt

Western SEO specialists have started receiving emails from Google Search Console reminding them to remove noindex rules from their robots.txt files, as reported by Search Engine Roundtable.

The message tells the site owner that the site's robots.txt file contains a noindex rule, which Google does not support. Google never officially supported this rule, and starting September 1, 2019, it will stop working entirely.

Some webmasters received the notification even though they had removed all noindex rules from robots.txt a few weeks earlier.
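Because some owners got the warning after cleaning up, it may be worth re-checking the live file. Below is a minimal Python sketch, assuming you have already downloaded the robots.txt contents as a string. The helper `find_noindex_rules` and the sample file (including the placeholder domain example.com) are hypothetical and not a Google tool. It reports every non-comment line whose field name is noindex, in any letter case.

```python
import re

# Matches a robots.txt line whose field name is "noindex" (case-insensitive),
# e.g. "Noindex: /private/". Google stops honoring such lines on September 1, 2019.
NOINDEX_LINE = re.compile(r"^\s*noindex\s*:", re.IGNORECASE)


def find_noindex_rules(robots_txt: str) -> list[tuple[int, str]]:
    """Return (line_number, line) pairs for every noindex rule in a robots.txt body.

    Hypothetical helper for illustration only.
    """
    hits = []
    for number, line in enumerate(robots_txt.splitlines(), start=1):
        # Drop comments ("# ...") before checking the field name.
        content = line.split("#", 1)[0]
        if NOINDEX_LINE.match(content):
            hits.append((number, line.strip()))
    return hits


# Hypothetical sample file; example.com is a placeholder.
sample = """User-agent: *
Disallow: /admin/
Noindex: /search/
# noindex: /old/  (commented out, ignored)
Sitemap: https://example.com/sitemap.xml
"""

for number, line in find_noindex_rules(sample):
    print(f"line {number}: {line}")  # prints "line 3: Noindex: /search/"
```

An empty result means no active noindex lines remain; commented-out lines are skipped because crawlers ignore them too.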

SEO expert comment:

“In our company’s practice, noindex was never used in robots.txt files. Instead, it was placed in the page code to keep a page out of the index, like this: `<meta name="robots" content="noindex">`.

However, this tag is now outdated and has not been used in practice for a long time, since lately it has worked only about half the time. Webmasters who still use noindex in robots.txt in any form should heed Google's messages and remove the outdated rule.

Technically, the robots.txt file tells the crawler what it may and may not crawl. Even so, pages blocked this way can still end up in search engine indexes.

Here is a reminder of the directives used in robots.txt:

User-agent: specifies which crawler the rules that follow apply to (User-agent: * means all crawlers);

Allow: permits crawling of the specified path;

Disallow: prohibits crawling of the specified path;

Sitemap: gives the location of the sitemap file, such as sitemap.xml;

Clean-param: lists URL parameters, such as tracking tags or session IDs, that do not change page content, so the robot can ignore them when crawling (a Yandex directive that Google does not support);

Crawl-delay: sets the minimum interval, in seconds, between the robot's successive page requests (Google does not support it).”
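The effect of these directives can be checked with Python's standard `urllib.robotparser` module. The sketch below parses a hypothetical robots.txt (example.com and all paths are placeholders) and queries it. This parser checks Allow and Disallow rules in file order, so the more specific Allow line is placed first; Google instead applies the most specific matching rule, and the result is the same for this file. Clean-param is not understood by this parser and is simply skipped.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt using the directives listed above.
# Clean-param is Yandex-specific; Python's parser ignores lines it does not know.
ROBOTS_TXT = """User-agent: *
Allow: /catalog/public/
Disallow: /catalog/
Crawl-delay: 5
Clean-param: utm_source&utm_medium /catalog/
Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Allow/Disallow decide whether a crawler may fetch a URL.
print(parser.can_fetch("*", "https://example.com/catalog/public/item"))  # True
print(parser.can_fetch("*", "https://example.com/catalog/secret"))       # False
print(parser.can_fetch("*", "https://example.com/about"))                # True (no rule matches)

# Crawl-delay and Sitemap are exposed as plain values.
print(parser.crawl_delay("*"))  # 5
print(parser.site_maps())       # ['https://example.com/sitemap.xml']
```

A False from `can_fetch` only means the URL should not be crawled; as noted above, it does not guarantee that the page stays out of the index.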
